Bug triage can be messy. Classifying incoming issues, identifying affected components, and deciding priority all demand speed and accuracy, which is something AI handles surprisingly well when given the right structure.

In this post, I'll walk you through how I built a multi-agent system using Azure OpenAI to streamline bug triage, using a virtual team of AI agents working together: a Classifier, a Fix Recommender, and a Reviewer.

Why Multi-Agent?

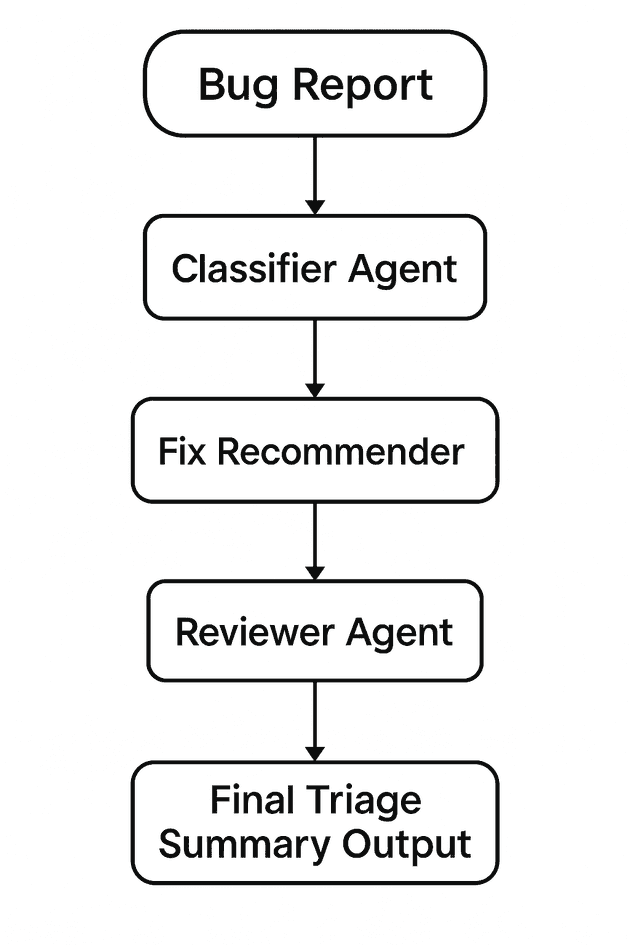

Multi-agent systems are gaining traction because they mirror how real teams operate. Each agent has a specialized role and collaborates to solve a problem. In this setup, agents process bug reports collaboratively, each focusing on a specific task in the triage pipeline.

Key benefits of this approach:

- Specialized Expertise: Each agent can be optimized for its specific task

- Reduced Hallucination: Cross-validation between agents helps catch errors

- Scalable Workflow: Easy to add new agents or modify existing ones

- Consistent Output: Structured JSON responses ensure reliable data flow

- Human-like Process: Mimics real-world team collaboration patterns

Architecture Overview

Getting Started

To implement this system in your own project:

1. Prerequisites

- Azure subscription with OpenAI access

- .NET 8.0 SDK

- GitHub repository for issue tracking

2. Setup Steps

```bash
# Clone the template repository
git clone https://github.com/xenobiasoft/ai-bug-triage
cd ai-bug-triage

# Install dependencies
dotnet restore
```

3. Configuration

- Set up Azure OpenAI endpoints in appsettings.json

- Configure GitHub webhook for automatic bug report processing

- Adjust agent prompts in the Agents/ directory
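
As a rough illustration of the Azure OpenAI configuration step, the sketch below reads an endpoint and deployment name out of appsettings.json content and fails fast if they look wrong. The section name `AzureOpenAI`, the keys `Endpoint` and `DeploymentName`, and the `SettingsLoader` helper are all assumptions made for this example; the template repository may use different names, so check its appsettings.json before copying anything.

```csharp
using System;
using System.Text.Json;

// Hypothetical options shape; the key names are assumptions,
// not necessarily what the template repository uses.
public sealed record AzureOpenAIOptions(string Endpoint, string DeploymentName);

public static class SettingsLoader
{
    // Reads the assumed "AzureOpenAI" section from appsettings.json content.
    public static AzureOpenAIOptions Load(string appSettingsJson)
    {
        using var doc = JsonDocument.Parse(appSettingsJson);
        if (!doc.RootElement.TryGetProperty("AzureOpenAI", out var section))
            throw new InvalidOperationException("Missing 'AzureOpenAI' section.");

        var options = section.Deserialize<AzureOpenAIOptions>()
            ?? throw new InvalidOperationException("'AzureOpenAI' section is empty.");

        // Fail fast on a missing or relative endpoint instead of at the first model call.
        if (!Uri.TryCreate(options.Endpoint, UriKind.Absolute, out _))
            throw new InvalidOperationException("Endpoint must be an absolute URI.");

        return options;
    }
}
```

In an ASP.NET Core app the built-in configuration system would normally do the binding for you; the manual parse here just keeps the example free of extra packages.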

Technical Implementation

The system is built using:

- Azure OpenAI GPT-4o (easily replaced with other models)

- C# .NET 8.0 for orchestration

- ASP.NET Core minimal API to keep the demo simple, though an Azure Function would be a better fit for production

Here's a simplified example of the agent orchestration:

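```csharp
public class BugTriageOrchestrator
{
    private readonly IOpenAIClient _openAIClient;

    // Agent dependencies (interface names are illustrative;
    // constructor injection omitted for brevity).
    private readonly IClassifierAgent _classifierAgent;
    private readonly IFixRecommenderAgent _fixRecommenderAgent;
    private readonly IReviewerAgent _reviewerAgent;

    public async Task<TriageResult> ProcessBugReport(string bugReport)
    {
        // Step 1: Classification
        var classification = await _classifierAgent.Classify(bugReport);

        // Step 2: Fix Recommendation (sees the report plus its classification)
        var recommendation = await _fixRecommenderAgent.Recommend(
            bugReport,
            classification
        );

        // Step 3: Review (sees everything produced so far)
        var review = await _reviewerAgent.Review(
            bugReport,
            classification,
            recommendation
        );

        return new TriageResult(classification, recommendation, review);
    }
}
```

The final message from the orchestration uses the following JSON structure: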

```json
{
  "bugReport": "string",
  "classification": {
    "classification": "string",
    "confidence-score": "number",
    "justification": "string"
  },
  "recommendation": {
    "affected-areas": ["string"],
    "confidence-score": "number",
    "justification": "string",
    "recommendation": "string"
  },
  "review": {
    "approved": "boolean",
    "confidence-score": "number",
    "justification": "string"
  }
}
```

The Agents

1. Classifier Agent

- Parses incoming bug reports

- Assigns classification (UI, Backend, Performance, etc.)

- Provides a confidence score and rationale

2. Fix Recommender Agent

- Analyzes the issue

- Suggests related code modules or file paths

- Recommends possible root causes or recent related PRs

- Provides a confidence score

3. Reviewer Agent

- Evaluates the previous agents' responses

- Flags hallucinations or inconsistencies

- Provides a confidence score and rationale
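
All three agents share the same shape: fixed instructions plus whatever input the earlier steps produced. The sketch below shows one way that could be modelled. `PromptAgent` and the `CompleteChat` delegate are hypothetical names introduced for illustration; the delegate stands in for the actual Azure OpenAI chat call, which is deliberately left out here.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical abstraction over the model call: (systemPrompt, userMessage) -> raw reply text.
// In a real app this would wrap an Azure OpenAI chat completion request.
public delegate Task<string> CompleteChat(string systemPrompt, string userMessage);

// One agent = one fixed system prompt plus the sections the orchestrator passes in.
public sealed class PromptAgent
{
    private readonly string _systemPrompt;
    private readonly CompleteChat _complete;

    public PromptAgent(string systemPrompt, CompleteChat complete)
    {
        _systemPrompt = systemPrompt ?? throw new ArgumentNullException(nameof(systemPrompt));
        _complete = complete ?? throw new ArgumentNullException(nameof(complete));
    }

    // Joins labelled sections (bug report, classification, ...) into one user message
    // and sends it together with the agent's system prompt.
    public Task<string> RunAsync(params (string Label, string Content)[] sections)
    {
        var parts = new string[sections.Length];
        for (var i = 0; i < sections.Length; i++)
            parts[i] = $"{sections[i].Label}:\n{sections[i].Content}";

        return _complete(_systemPrompt, string.Join("\n\n", parts));
    }
}
```

With this shape, the Classifier would be called with a single bug report section, while the Reviewer would receive three sections: the bug report, the classification, and the recommendation. Keeping the model call behind a delegate also makes each agent easy to exercise with a canned reply in unit tests.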

Prompts and Coordination

Each agent was given a system prompt:

Classifier Agent:

"You are a senior triage engineer. Read the bug report and classify the bug. Return a JSON object with classification, justification, and confidence-score between 0 and 1."

Fix Recommender Agent:

"Based on this bug report and its classification, suggest likely modules, files, or components. Include a brief justification. Return a JSON object with affected-areas, justification, recommendation, and confidence-score between 0 and 1."

Reviewer Agent:

"Review the bug report, classification, and fix recommendation. Flag vague or hallucinated suggestions. Return a JSON object with approved, justification, and confidence-score."

I used a C# orchestrator to handle the call sequence and capture responses.
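
Capturing a response mostly means turning the agent's JSON reply into a typed object and rejecting anything malformed before it reaches the next agent. Here is a minimal sketch for the Classifier reply using System.Text.Json. The `ClassifierResponse` and `AgentJson` names are assumptions for this example, and it expects the reply to be bare JSON with no surrounding text.

```csharp
using System;
using System.Text.Json;
using System.Text.Json.Serialization;

// Mirrors the keys requested in the Classifier prompt; the type name is illustrative.
public sealed class ClassifierResponse
{
    [JsonPropertyName("classification")]
    public string Classification { get; init; } = "";

    [JsonPropertyName("justification")]
    public string Justification { get; init; } = "";

    // The hyphenated key cannot be a C# identifier, so it is mapped explicitly.
    [JsonPropertyName("confidence-score")]
    public double ConfidenceScore { get; init; }
}

public static class AgentJson
{
    // Parses a raw model reply and rejects replies that are not usable downstream.
    public static ClassifierResponse ParseClassifier(string raw)
    {
        ClassifierResponse? parsed;
        try
        {
            parsed = JsonSerializer.Deserialize<ClassifierResponse>(raw);
        }
        catch (JsonException ex)
        {
            throw new FormatException("Classifier reply was not valid JSON.", ex);
        }

        if (parsed is null)
            throw new FormatException("Classifier reply was JSON null.");

        // A missing score deserializes to 0, so only out-of-range values are caught here.
        if (parsed.ConfidenceScore < 0 || parsed.ConfidenceScore > 1)
            throw new FormatException("confidence-score must be between 0 and 1.");

        return parsed;
    }
}
```

The same pattern would apply to the Fix Recommender and Reviewer replies, each with its own response type.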

Example: Real Bug Report Walkthrough

I tested the agent orchestration using Postman. The equivalent curl request looks like this (replace <base-url> with the address where the API is running):

```bash
curl -X POST "<base-url>/api/bug-triage/" \
  -H "Content-Type: application/json" \
  -d '{
    "description": "The app crashes when uploading a large image from mobile Safari. It works fine in Chrome."
  }'
```

Bug Report:

"The app crashes when uploading a large image from mobile Safari. It works fine in Chrome."

Classifier Response:

```json
{
  "classification": "Frontend - Image Upload",
  "justification": "The app crashes specifically when uploading a large image from mobile Safari, whereas it works fine on Chrome. This implies a compatibility issue between the app and mobile Safari.",
  "confidence-score": 0.85
}
```

Fix Recommender Response:

```json
{
  "affected-areas": [
    "components/ImageUploader.razor",
    "services/UploadService.cs"
  ],
  "justification": "The bug involves uploading, specifically on mobile Safari. These modules are responsible for handling image uploads.",
  "recommendation": "Investigate the Mobile Safari compatibility module and the Image upload component to identify the specific code causing the crash on this browser. Test and implement a fix to ensure proper handling of image uploads from mobile Safari.",
  "confidence-score": 0.85
}
```

Reviewer Response:

```json
{
  "approved": true,
  "confidence-score": 0.9,
  "justification": "The components and justification are relevant and clearly tied to the browser-specific issue."
}
```

Future Ideas

- GitHub integration: auto-label or comment on new issues

- Embedding search to suggest past similar bugs

- Human-in-the-loop validation before assigning bugs

- Add a "PM Agent" to summarize impact on roadmap

- Implement A/B testing for different prompt strategies

- Add support for multi-language bug reports

- Implement a fourth Fixer agent that creates a pull request with the implemented fix
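
To make the human-in-the-loop idea a little more concrete, here is one possible gate: a bug is auto-assigned only when the Reviewer approved and every agent's confidence clears a threshold, and everything else goes to a person. The `TriageGate` and `TriageRoute` names and the 0.8 default are assumptions chosen purely for illustration, not tuned values.

```csharp
using System;

public enum TriageRoute { AutoAssign, HumanReview }

public static class TriageGate
{
    // Auto-assign only when the Reviewer approved and the weakest
    // confidence score still meets the threshold; otherwise ask a human.
    // The 0.8 default is an arbitrary illustrative value.
    public static TriageRoute Decide(
        bool approved,
        double classifierConfidence,
        double recommenderConfidence,
        double reviewerConfidence,
        double threshold = 0.8)
    {
        var lowest = Math.Min(
            classifierConfidence,
            Math.Min(recommenderConfidence, reviewerConfidence));

        return approved && lowest >= threshold
            ? TriageRoute.AutoAssign
            : TriageRoute.HumanReview;
    }
}
```

With the scores from the walkthrough (0.85, 0.85, and 0.9, approved), the lowest score is 0.85, so this gate would auto-assign. Drop any one score below 0.8, or have the Reviewer reject the recommendation, and the bug is routed to a person instead.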

Final Thoughts

This project gave me a hands-on look at how powerful and practical multi-agent AI systems can be, especially for tasks that mirror real team workflows. It's also a fun playground for prompt engineering and AI tool orchestration.

What other development processes could benefit from a virtual AI team? Let me know your ideas in the comments!